I've been hearing a lot of buzz about Manus AI lately, especially claims that it's beating GPT-4 in real-world performance tests and redefining automation and productivity. As someone who tests AI tools regularly for ToolJunction, I had to dig deeper and see what all the fuss is about with this so-called "best AI code agent."

What is Manus AI?

Manus AI is a next-generation autonomous AI agent built to turn human intent directly into action. Unlike typical chatbots that only generate replies, Manus AI independently carries out tasks across different domains — from workflow automation to complex decision-making — with minimal human input.

By combining Large Language Models (LLMs), multi-modal capabilities, and integrated tool systems, it aims to offer seamless, end-to-end task execution.

What Manus AI Claims to Do

According to their website (manus.im), Manus AI isn't just another chatbot or traditional AI assistant. They're positioning it as an "autonomous AI agent" that can actually complete tasks from start to finish. The pitch is pretty compelling – instead of just getting suggestions, you get actual work done. They claim to be bridging the gap between human thoughts and actions through advanced AI automation.

Here's what caught my attention in their feature list:

- Full task execution – They claim it can write reports, create spreadsheets, analyze data, plan travel itineraries, and handle file processing without you having to babysit every step. This autonomous task execution is what sets it apart from standard AI assistants.

- Advanced tool integration – This is where it gets interesting. Manus AI supposedly connects with web browsers, code editors, database management systems, and other external tools to actually interact with your existing software stack. This tool invocation capability could be a game-changer for workflow automation.

- Multi-modal capabilities – It handles text, images, and code all in one interface, processing and generating multiple types of data, which could be a huge time-saver for content generation and analysis.

- Asynchronous execution – Here's something different – they say tasks can continue running even when your device is off. If true, that's pretty impressive for business process automation.

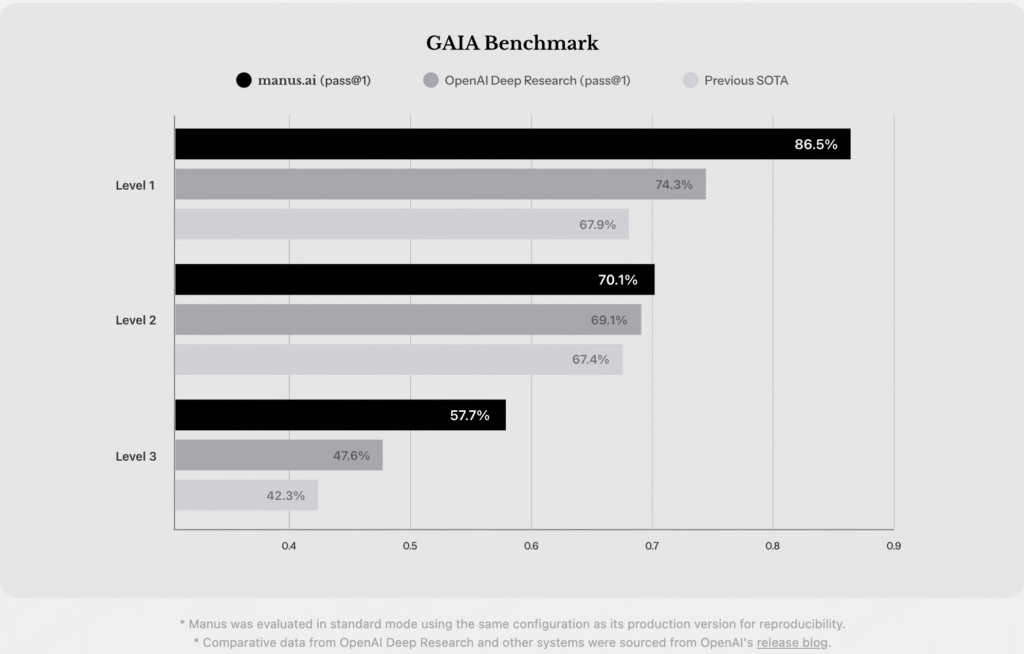

The GAIA Benchmark Performance Claims

Now, this is where I got really curious. Manus AI claims to have achieved state-of-the-art (SOTA) performance on the GAIA benchmark, which is a big deal if it's accurate. The GAIA benchmark isn't some made-up test – it's developed by Meta AI, Hugging Face, and the AutoGPT team to measure how well AI agents handle real-world tasks, testing logical reasoning, multi-modal processing, and external tool usage.

At the time of writing, the GAIA leaderboard shows H2O.ai's h2oGPTe Agent at the top with 65% accuracy. Manus AI claims to be doing even better than that, which would put it ahead of several well-known AI systems, including:

- OpenAI's GPT-4o (32% on GAIA)

- Google's Langfun Agent (49%)

- Microsoft's agent built on OpenAI's o1 model (38%)

- OpenAI's GPT-4 with plugins (15-30%)

I have to say, if these GAIA benchmark accuracy numbers are legit, that's genuinely impressive for what appears to be a newer company competing with OpenAI and other AI leaders.

My Initial Impressions

The Good:

The concept is solid. We definitely need AI that can execute tasks, not just talk about them. The advanced tool integration angle is particularly appealing – I'm tired of copying and pasting between different AI tools and my actual work applications.

The GAIA benchmark performance, if accurate, suggests this isn't just marketing hype. GAIA tests practical problem-solving and real-world utility, which is exactly what we need more of in AI tools for productivity enhancement.

Manus also advertises adaptive learning and optimization, which could mean the AI gets better at understanding your specific workflow over time, something traditional AI assistants lack.

The Questions:

I'm curious about the actual user experience. High benchmark scores are great, but how does it feel to use day to day? The interface, reliability, and ease of setup matter just as much as raw performance.

Pricing and availability are unclear from their website. For a tool with this scope, I'd expect enterprise-level pricing, but the site doesn't make it obvious who can actually access it right now.

Who Should Pay Attention

If you're dealing with repetitive tasks that involve multiple tools and data sources, Manus AI could be worth watching. This autonomous AI agent seems especially relevant for:

- Business analysts who spend time gathering and analyzing data from different sources

- Project managers juggling multiple workflows

- Content creators who need to research, write, and format content across platforms

- Developers who want an AI that can actually interact with their development environment

- Anyone looking to streamline decision-making through AI-powered automation

Frequently Asked Questions About Manus AI

- What is Manus AI used for? Manus AI helps automate tasks, analyze data, create content, and make decisions — all aimed at boosting productivity.

- How is Manus AI different from GPT-4? GPT-4 mainly offers suggestions and responses, while Manus AI is designed to carry out tasks on its own. If the claims hold up, that makes it a more autonomous assistant.

- What is the GAIA benchmark? The GAIA benchmark measures how well AI agents handle real-world tasks, focusing on reasoning, tool usage, and automation. Manus AI is reported to outperform many current AI models on this benchmark.

- Who created Manus AI? Manus AI was developed by the team behind Monica (Monica.im), a Chinese AI startup.

- Can businesses use Manus AI? The company positions it for business use cases such as workflow automation, process optimization, and data-driven decision-making, though how well it performs in those settings remains to be seen.

- Is Manus AI publicly available? Manus AI can be accessed through manus.im. Availability may vary depending on user location and business integration needs.

Conclusion

Manus AI appears to be tackling a real problem – the gap between AI that can think and AI that can actually do.

The claimed benchmark performance is encouraging, and the feature set addresses genuine pain points I see in current AI tools.

However, I'm taking a "wait and see" approach. Performance claims are one thing, but real-world usability is another. I'm planning to get hands-on access as soon as possible to test whether the experience lives up to the promises.

For now, it's definitely on my radar as one of the more interesting AI developments this year. If they can deliver on even half of what they're promising, it could change how we think about AI assistants.

Have you tried Manus AI? I'd love to hear about your experience in the comments below.

This review is based on publicly available information and company claims. I'll update with hands-on testing results once I get access to the platform.

About the Reviewer: This tool has been reviewed by our AI tool expert at ToolJunction. Connect with our team on LinkedIn for more insights on the latest AI tools and automation solutions.