The Rise of Free Open Source LLMs

AI has rapidly evolved from a specialized area of study to a tool used on a daily basis by companies, scholars, and artists. Even though demand is rising, the high price and closed nature of proprietary models like Anthropic's Claude and OpenAI's GPT-4 continue to be major obstacles to adoption.

Free open source LLMs can help with that. These models can be installed locally or on cloud infrastructure, and they are freely available to the public. They frequently compete with proprietary models, making them strong OpenAI substitutes for individuals, businesses, and startups.

What Are Free Open Source LLMs?

An AI system that has been trained on billions of words to produce text, respond to queries, and support sophisticated reasoning is known as a large language model (LLM). Although many LLMs are proprietary, more and more are becoming open source, allowing the community to access the underlying architecture and weights.

In this article, "free open source LLMs" means models for which:

- The model weights are openly accessible.

- There are no licensing fees for using them.

- Developers are free to fine-tune, modify, or deploy them, within the limits of each model's license.

Well-known examples include Falcon 180B, Mistral 7B, and Meta's LLaMA 3, each of which offers a unique trade-off between accuracy, efficiency, and size.

Why Use Free Open Source LLMs Instead of Proprietary Models?

There are three primary reasons why free open source LLMs are becoming more and more popular:

- Cost Savings: At scale, proprietary APIs can be costly. Running an open model locally or in your own cloud can cut per-request costs significantly once usage is high enough to keep the hardware busy.

- Data Control & Privacy: There are risks to compliance when sending private data to outside vendors. You have control over where your data is processed when using open-source models.

- Adaptability and Personalization: Open LLMs have an advantage over "one-size-fits-all" commercial models since they can be tailored for domain-specific tasks like legal, healthcare, or education.

Because of these benefits, free open source LLMs are a viable choice for solo creators who value independence, businesses that need compliance, and startups that need affordability.

Quick Comparison: Free & Open-Source LLMs

| Model | Pricing | Best Use Case | Best For |
|---|---|---|---|
| LLaMA 3 (Meta) | Free (Meta Llama 3 Community License) | Cutting-edge assistants, research | Enterprises, Research Labs |
| Mistral 7B | Free (Apache 2.0) | Lightweight, cost-effective inference | Startups, Solo Devs |
| Mixtral 8×7B | Free (Apache 2.0) | High-performance MoE for scalability | Enterprises, AI Startups |
| Falcon 180B | Free (Falcon-180B TII License, with hosting restrictions) | Enterprise-grade deployment | Enterprises |
| Gemma 2 (Google) | Free (Gemma Terms of Use) | Balanced model for production pipelines | Startups, Data Teams |
| Command R+ (Cohere) | Free for non-commercial use (CC BY-NC 4.0) | Retrieval-augmented generation, reasoning | Enterprise AI Teams |
| Phi-3 (Microsoft) | Free (MIT) | Small, efficient models for local apps | Solo Creators, Startups |

Here Are the 7 Best Free Open Source LLMs

If you're looking for strong OpenAI alternatives that are affordable and adaptable, these seven free open source LLMs stand out in 2025. Each has its own strengths, from enterprise-grade architectures designed for large-scale deployment to lightweight models that run locally.

1. LLaMA 3 by Meta

Overview

Meta’s LLaMA 3 family is among the most capable open-weight model families available. Released in April 2024, it launched in 8B and 70B parameter versions. LLaMA 3 has been benchmarked against GPT-3.5 and frequently comes out ahead in reasoning, summarization, and general conversational quality.

Features

- Multiple parameter sizes (8B and 70B at launch; Llama 3.1 later added a 405B model).

- Strong performance across reasoning, summarization, and coding.

- Large community adoption with plenty of fine-tuned variants on Hugging Face.

Pricing

- Free to download and use.

- Infrastructure costs depend on model size (the 70B version needs roughly 140 GB for 16-bit weights alone, so it calls for multiple 80 GB GPUs unless it is quantized).
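To see how model size translates into hardware, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold the weights; the function name is illustrative, and real deployments need extra headroom for activations and the KV cache, which this sketch ignores.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Assumption: every parameter is stored at the same precision.
    Activations, KV cache, and framework overhead are not included.
    """
    bytes_per_weight = bits_per_weight / 8
    # (params_billion * 1e9 parameters) * bytes_per_weight / 1e9 bytes per GB
    return params_billion * bytes_per_weight


for name, size in [("8B", 8), ("70B", 70)]:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(size, bits)
        print(f"LLaMA 3 {name} at {bits}-bit: about {gb:.0f} GB of weights")
```

By this estimate the 70B model drops from about 140 GB at 16-bit to about 35 GB at 4-bit, which is why quantization decides whether one GPU is enough.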

Pros

- State-of-the-art among open models.

- Large ecosystem of support and tools.

- Extensible for research and product development.

Cons

- Large models require significant compute resources.

- Safety and alignment tooling is less mature than what surrounds GPT-4.

Best For

Enterprises, advanced startups, and research labs looking for maximum performance from open-source models.

2. Mistral 7B

Overview

Mistral 7B has become one of the most popular choices for startups and solo developers. Despite its relatively small size, it offers impressive performance thanks to careful architecture design.

Features

- Compact 7B parameter size, yet competitive with larger models.

- Uses grouped-query attention and sliding-window attention to speed up inference and handle longer sequences.

- Released under Apache 2.0, which is commercial-friendly.

Pricing

- Completely free and permissively licensed.

- Runs efficiently on consumer GPUs with quantization.
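Quantization is what makes consumer hardware viable, so a small illustration may help. The sketch below shows the basic idea with simple symmetric int8 (absmax) quantization in plain Python. It is a teaching example under that assumption, not the scheme any particular tool uses; runtimes such as llama.cpp rely on more elaborate block-wise formats.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0  # avoid dividing by zero
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.03, 1.0]  # made-up values for illustration
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each weight now takes one byte instead of two or four, at the cost of a rounding error of at most half the scale per value.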

Pros

- Lightweight and fast for deployment.

- Easy to integrate into existing workflows.

- Minimal hardware requirements compared to larger LLMs.

Cons

- Cannot match 70B+ models for complex reasoning.

- Limited performance in multi-step problem solving.

Best For

Solo developers, indie creators, and early-stage startups that want a powerful model without large infrastructure costs.

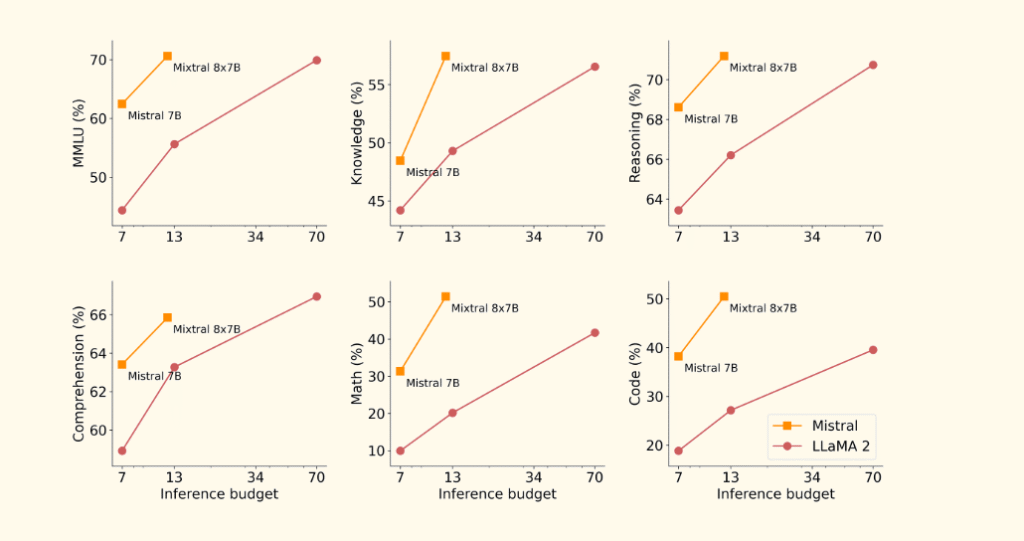

3. Mixtral 8×7B

Overview

Mixtral 8×7B is a Mixture of Experts (MoE) model that balances efficiency with power. Unlike traditional dense models, it activates only part of the network for each query, which saves compute while still providing high performance.

Features

- 8 experts per feed-forward layer, with only 2 selected per token, so roughly 13B of its roughly 47B total parameters are active for any given token.

- Competitive with GPT-3.5 and often surpasses LLaMA 2.

- Excellent for reasoning and multilingual tasks.
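The routing idea behind a Mixture of Experts layer can be sketched in a few lines. The example below is a toy illustration in plain Python: the experts are stand-in functions and the router scores are made-up numbers, whereas a real model learns both and applies this step per token inside every MoE layer.

```python
import math


def top2_route(router_logits):
    """Pick the two highest-scoring experts and softmax their scores into weights."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:2]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]  # numerically stable
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x, experts, router_logits):
    """Weighted sum of the two selected experts; the other six are never called."""
    return sum(weight * experts[i](x) for i, weight in top2_route(router_logits))


# Hypothetical experts: expert k simply multiplies its input by k.
experts = [lambda x, k=k: k * x for k in range(8)]
logits = [0.1, 2.0, -1.0, 0.3, 1.0, 0.0, -0.5, 0.2]  # made-up router scores

print(top2_route(logits))
print(moe_layer(1.0, experts, logits))
```

Only two of the eight experts run for this input, which is where the compute savings come from, while all eight still have to be held in memory.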

Pricing

- Free to download and use.

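- Requires more advanced infrastructure for MoE serving: all experts must stay loaded, so memory needs match a roughly 47B-parameter model even though only part of it runs per token.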

Pros

- Extremely strong performance for its size.

- Efficient due to MoE design.

- Widely adopted and community-supported.

Cons

- Deployment is more complex than simple dense models.

- Needs specialized serving pipelines for best results.

Best For

Startups and enterprises building scalable AI applications where both performance and cost-efficiency are important.

4. Falcon 180B

Overview

The Falcon 180B model, developed by the Technology Innovation Institute (TII) in the UAE, was one of the largest openly released LLMs when it launched in 2023. With 180 billion parameters, it remains one of the largest models available to the public.

Features

- 180B parameter size, extremely powerful.

- Released under the Falcon-180B TII License, which is based on Apache 2.0 but adds restrictions.

- Strong results in text generation and summarization.

Pricing

- Free to use.

- Requires clusters of GPUs, making it expensive to run privately.

Pros

- Enterprise-grade scale.

- Commercial use is permitted, though offering the model as a hosted service is restricted.

- Demonstrates open-source competitiveness at the very largest scale.

Cons

- Impractical for small teams due to infrastructure costs.

- Difficult to fine-tune without high-end resources.

Best For

Large enterprises and research institutions with access to multi-GPU clusters.

5. Gemma 2 by Google

Overview

Google’s Gemma 2 is an open-weight sibling of Gemini, built to balance performance with usability. It has quickly gained traction as a reliable option for startups.

Features

- Multiple sizes optimized for different use cases.

- Good multilingual capabilities.

- Integrates well with Google’s cloud ecosystem.

Pricing

- Free to download.

- Released under the Gemma Terms of Use, which generally allow commercial use subject to Google's prohibited-use policy.

Pros

- Backed by Google’s ecosystem.

- Balanced between size and performance.

- Readily deployable for production.

Cons

- Ecosystem is still newer compared to LLaMA or Mistral.

- Not the top performer in raw benchmarks.

Best For

Startups and data teams looking for a stable, Google-supported option.

6. Command R+ by Cohere

Overview

Command R+ is optimized for retrieval-augmented generation (RAG), making it especially useful in enterprise knowledge management and customer service applications.

Features

- Specialized for reasoning-heavy and retrieval tasks.

- Strong at integrating with external knowledge bases.

- Available as open weights, with hosted services for ease of use.
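To make the retrieval-augmented pattern concrete, here is a minimal sketch of how a RAG prompt can be assembled before it is sent to a model. Everything in it is an illustrative assumption: the word-overlap scorer stands in for a real embedding-based retriever, the passages are invented, and the prompt wording is not Cohere's own format.

```python
def score(query: str, passage: str) -> int:
    """Toy relevance score: how many distinct query words appear in the passage."""
    return len(set(query.lower().split()) & set(passage.lower().split()))


def retrieve(query, passages, k=2):
    """Return the k passages with the highest toy relevance score."""
    return sorted(passages, key=lambda p: score(query, p), reverse=True)[:k]


def build_prompt(query, passages, k=2):
    """Put the retrieved passages in front of the question as numbered sources."""
    sources = "\n".join(
        f"[{n}] {p}" for n, p in enumerate(retrieve(query, passages, k), start=1)
    )
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


# Invented knowledge-base snippets for illustration.
passages = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Return requests must include the order number.",
]
query = "How many days do refunds take?"

print(build_prompt(query, passages, k=1))
```

The model then answers from the supplied sources rather than from memory alone, which is what makes RAG-oriented models useful for knowledge bases.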

Pricing

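- Weights are free to download under CC BY-NC 4.0, which limits them to non-commercial use.

- Hosted options are available via Cohere for production and commercial deployments.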

Pros

- Tailored for enterprise applications.

- Strong in accuracy when paired with RAG pipelines.

- Flexible deployment options.

Cons

- Less versatile than general-purpose LLMs.

- Best performance requires RAG integration.

Best For

Enterprise AI teams that need factually accurate, reasoning-driven applications.

7. Phi-3 by Microsoft

Overview

Microsoft’s Phi-3 is designed to be efficient, small, and accessible. It provides good performance while remaining easy to run on modest hardware.

Features

- Small parameter sizes, highly efficient.

- Optimized for coding and reasoning tasks.

- Can run on consumer-grade GPUs or CPUs with quantization.

Pricing

- Free to use under the MIT license.

- Very low infrastructure costs.

Pros

- Excellent efficiency.

- Practical for local deployment.

- Strong performance relative to size.

Cons

- Not as powerful as larger models like LLaMA 3.

- Limited ecosystem compared to Mistral or Meta models.

Best For

Solo creators, hobbyists, and small startups experimenting with AI locally.

FAQ: Free Open Source LLMs

Which free open source LLM is best for startups?

Startups benefit from models that balance cost and performance. Mistral 7B and Phi-3 are the most practical for small teams with limited hardware.

Which open-source model is closest to GPT-4?

LLaMA 3 (70B) and Mixtral 8×7B come closest among the models covered here. Both often match or beat GPT-3.5, though they generally trail GPT-4 on harder tasks.

Can these models be used commercially?

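It depends on the model. Mistral 7B and Mixtral 8×7B use Apache 2.0 and Phi-3 uses MIT, both of which are permissive. LLaMA 3, Gemma 2, and Falcon 180B use custom licenses that allow commercial use with conditions, and the Command R+ weights are limited to non-commercial use. Always double-check specific license terms before deploying commercially.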

How can I run these models locally?

Use frameworks like Ollama, LM Studio, or llama.cpp with quantized weights to run models on consumer hardware.
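As one example, Ollama exposes a local HTTP API once it is running. The sketch below assumes Ollama is installed, serving on its default port 11434, and that a model has been pulled under the name `mistral` (for instance with `ollama pull mistral`); the helper names are illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a POST request asking Ollama for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Explain open-weight models in one sentence.")` returns the model's reply as a string, and nothing leaves your machine.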

What is the cheapest way to deploy an open LLM?

Deploy a 7B parameter model with quantization on a single GPU. For larger workloads, consider hosted inference services on Hugging Face or cloud platforms.

Conclusion

The growth of free and open-source LLMs has made the AI landscape more competitive. Businesses, researchers, and individual creators can now access powerful models with full control over data and deployment, reducing their reliance on costly APIs.

- For businesses, LLaMA 3 and Mixtral provide state-of-the-art performance.

- For individuals and startups, Mistral 7B and Phi-3 offer cost-effective, lightweight solutions.

- Command R+, Falcon, and Gemma 2 each have special advantages for particular use cases.

These models are catching up to and sometimes even surpassing proprietary offerings as the open-source ecosystem develops. Your intended use case, scale, and budget will all influence your choice.

At tooljunction, we share honest AI tool reviews and tutorials to help you choose the right tools for your business.