In 2025, LLM APIs have become essential tools for developers who want to build AI-powered applications without training large models from scratch. A few straightforward API calls give you access to high-quality language generation and understanding, whether you're building a chatbot, automating content, or adding intelligent search to your application. Hosted models such as GPT, Claude, Cohere, Mistral, or Gemini let developers focus on building new features while the API provider handles infrastructure, optimization, and scaling. This guide compares the leading LLM APIs for developers by features, costs, and use cases to help you pick the right one for your project.

What Makes a Good LLM API

Developers should consider more than just raw model quality when deciding which LLM API to use. A trustworthy API offers features that facilitate seamless integration while striking a balance between cost, scalability, and performance.

- Accuracy and reasoning: determine how well the model handles complex queries.

- Latency and scalability: matter for real-time applications and large-scale deployments.

- Pricing and token efficiency: affect long-term viability as workloads grow.

- Customization and fine-tuning: let developers adapt models to specific industries or domains.

- Safety and reliability: help ensure the API handles sensitive content appropriately.

- Ecosystem support: SDKs, documentation, and integrations with developer tools.

Why Developers Need LLM APIs

Developers use language model APIs because they remove the burden of training and maintaining large models. Teams can connect to a hosted API and start building right away, without managing infrastructure or GPUs and without retraining models for each new use case. This lowers the barrier to entry, speeds up time to market, and gives developers the flexibility to choose the API that best fits their workload, budget, and product goals. In short, LLM APIs make advanced AI accessible in the same way cloud services made enterprise-grade infrastructure accessible a decade ago.

Quick Comparison of LLM APIs for Developers

| API | Strengths | Pricing (2025) | Best For |
|---|---|---|---|
| GPT (OpenAI) | Best reasoning, multimodal support | $5 input / $15 output per 1M tokens | General-purpose apps, coding tools |
| Claude (Anthropic) | Long context, safety-first AI | $3 input / $15 output per 1M tokens | Enterprise, compliance-heavy apps |
| Cohere | Strong embeddings, multilingual | $1.50–$3 per 1M tokens | Search, RAG, multilingual systems |
| Mistral | Open-source + affordable | ~$1 per 1M tokens | Custom hosting, startups, edge use |
| Gemini (Google) | Google Cloud integration, multimodal | $1.25 input / $5 output per 1M tokens | Cloud-native, enterprise scale |

The 5 Best LLM APIs for Developers

These APIs offer strong language understanding, generation, and reasoning capabilities for building AI-powered applications. Each has its own features, costs, and strengths, so the right choice depends on whether your project involves chatbots, content automation, or sophisticated search.

1. GPT API (OpenAI)

The OpenAI GPT API continues to be the most widely adopted service, powering ChatGPT, Microsoft Copilot, and a broad ecosystem of developer-built tools.

Features

- Models: GPT-4o for multimodal reasoning, GPT-4 Turbo for balanced performance, GPT-3.5 for cost-effective scaling.

- Multimodal Support: Works with text and images, enabling richer use cases.

- Function Calling: Lets apps trigger external tools or APIs through the model.

- Fine-tuning: Customizes smaller models for domain-specific applications.
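Function calling works by describing your tools to the model as JSON Schema. When the model decides a tool is needed, it returns the tool's name plus JSON-encoded arguments; your application runs the function and sends the result back as a new message. The sketch below covers only the application side and assumes the Chat Completions tool format (`tools` entries with `"type": "function"`, and tool calls whose `arguments` field is a JSON string). The `get_weather` tool is hypothetical and returns canned data.

```python
import json
from typing import Any, Callable


# Hypothetical local tool; a real app would call a weather service here.
def get_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    return {"city": city, "temperature": 21, "unit": unit}


# Tool description sent to the model in the request's "tools" field.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may return to local Python callables.
REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_call(tool_call: dict[str, Any]) -> dict[str, Any]:
    """Execute one tool call from the model and build the 'tool' message to send back."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[name](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],  # links the result to the model's request
        "content": json.dumps(result),
    }


# A tool call shaped like one the model would return (illustrative values).
example_call = {
    "id": "call_123",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}
print(run_tool_call(example_call))
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed.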

Pricing (2025 Snapshot)

- GPT-4o: Around $5 per million input tokens, $15 per million output tokens.

- GPT-3.5: Much cheaper at about $0.50 per million input tokens and $1.50 per million output tokens.

Best Use Cases

- Conversational agents and chatbots.

- Code generation and debugging workflows.

- Summarization and structured content drafting.

- RAG pipelines with embeddings + vector stores.

Pros

- Best-in-class reasoning ability and broad versatility.

- Strong developer ecosystem, documentation, and tooling.

Cons

- Higher costs compared to Claude, Cohere, or Mistral.

- Latency can increase under heavy demand.
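Under heavy demand, requests may slow down or be rejected with rate-limit errors (HTTP 429). A common mitigation is to retry with exponential backoff and random jitter. The sketch below is provider-agnostic: `TransientError` is a hypothetical exception your own client code would raise for retryable failures, and the delay parameters are illustrative defaults rather than any provider's recommendation.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (e.g. HTTP 429 or 503)."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying transient failures with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # The delay ceiling doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))  # full jitter spreads out retries
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the retry logic can be tested without actually waiting.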

2. Claude API (Anthropic)

The Claude API from Anthropic has grown popular for enterprise applications thanks to its safety-first design and extremely large context windows.

Features

- Models: Claude 3.5 Sonnet for balanced performance, Claude 3 Opus for deeper reasoning.

- Large Context Windows: Up to 200k tokens, excellent for analyzing lengthy documents.

- Safety Guardrails: Trained with Anthropic's Constitutional AI approach for more controlled outputs.

- Multi-turn Chat Support: Handles longer conversations effectively.
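Multi-turn chat with the Claude API is stateless on the server side: the client resends the conversation history with every request. The sketch below builds a request for Anthropic's Messages API (`POST https://api.anthropic.com/v1/messages` with `x-api-key` and `anthropic-version` headers) and checks the history against the 200k-token window using a rough 4-characters-per-token heuristic, which is an assumption and not Claude's actual tokenizer. The model ID is a placeholder; check Anthropic's documentation for current names.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
CONTEXT_WINDOW_TOKENS = 200_000  # context window cited for Claude 3.5 Sonnet


def estimate_tokens(text: str) -> int:
    """Very rough estimate (~4 characters per token for English text)."""
    return max(1, len(text) // 4)


def build_messages_request(
    api_key: str,
    history: list[dict[str, str]],
    user_input: str,
    model: str = "claude-3-5-sonnet-latest",  # placeholder model ID
    system: str = "You are a helpful assistant.",
    max_tokens: int = 1024,
) -> urllib.request.Request:
    """Append the new user turn to a copy of the history and build a Messages API request."""
    messages = history + [{"role": "user", "content": user_input}]
    prompt_tokens = sum(estimate_tokens(m["content"]) for m in messages) + estimate_tokens(system)
    if prompt_tokens + max_tokens > CONTEXT_WINDOW_TOKENS:
        raise ValueError("conversation likely exceeds the context window; summarize or trim it")
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "system": system,  # the system prompt is a top-level field, not a message
        "messages": messages,  # alternating user/assistant turns
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
```

After sending the request with `urllib.request.urlopen`, the reply text is in the response's `content` list of blocks; append it to `history` as an `assistant` turn before the next user message.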

Pricing

- Claude 3.5 Sonnet: Around $3 per million input tokens, $15 per million output tokens.

- Competitive pricing for workloads that require long context handling.

Best Use Cases

- Enterprise document analysis and summarization.

- Legal, healthcare, and compliance-heavy industries.

- Customer service bots where safety is critical.

Pros

- Reliable safety mechanisms and trustworthiness.

- Handles very long inputs without breaking context.

Cons

- Slightly weaker coding support compared to GPT.

- Smaller ecosystem and fewer integrations than OpenAI.

3. Cohere API

Cohere focuses on practical NLP tools for developers, with strong embeddings and multilingual capabilities.

Features

- Command R+ models: Optimized for RAG and structured outputs.

- Embeddings API: High-performing embeddings for semantic search.

- Multilingual Support: Works across 100+ languages.

- Enterprise Deployment: Options for private hosting.
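Semantic search with an embeddings API converts documents and the query into vectors, then ranks documents by cosine similarity. The sketch below shows only the ranking step. The tiny three-dimensional vectors are made up for illustration; real embeddings come from the provider's embeddings endpoint and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid division by zero for empty vectors
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k document IDs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up vectors standing in for real document embeddings.
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-faq": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # stands in for the embedding of "How do I get my money back?"
print(top_k(query, docs))
```

In a RAG system, the top-ranked documents are then inserted into the prompt so the model can answer from them; at scale, a vector database replaces this linear scan.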

Pricing

- Free tier with monthly credits.

- Paid tiers around $1.50–$3 per million tokens.

Best Use Cases

- Search engines and semantic retrieval.

- Enterprise knowledge management.

- Multilingual applications and chatbots.

Pros

- Affordable, with a generous free tier.

- Strong embeddings API for RAG systems.

Cons

- Less general-purpose than GPT or Claude.

- Limited multimodal capabilities.

4. Mistral API

Mistral AI stands out by providing open-source models alongside a hosted API, giving developers more flexibility.

Features

- Models: Includes Mistral 7B and Mixtral, a mixture-of-experts (MoE) model, for efficient performance.

- Open-Source Weights: Available for self-hosting and customization.

- Efficient Architecture: Designed for speed and lower resource use.

Pricing

- Hosted API as low as $1 per million tokens.

- No per-token fees when you self-host the open-source weights, though you pay for your own compute.

Best Use Cases

- Developers who need transparency and control.

- Startups avoiding vendor lock-in.

- Edge or on-premise deployments.
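One practical way to avoid vendor lock-in is to keep provider details in configuration, so switching providers becomes a config change rather than a code change. The sketch below assumes each configured provider exposes an OpenAI-style `/chat/completions` endpoint that accepts `model` and `messages` with Bearer-token auth, which OpenAI's API and Mistral's hosted API both use; the model names are placeholders to verify against each provider's docs. Providers with different request formats, such as Anthropic or Gemini, would need their own adapter.

```python
import json
import os
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Provider:
    base_url: str     # API root, without a trailing slash
    api_key_env: str  # environment variable holding the key
    model: str        # placeholder model name; check the provider's docs


# Hypothetical provider table; swapping providers is a one-line config change.
PROVIDERS = {
    "openai": Provider("https://api.openai.com/v1", "OPENAI_API_KEY", "gpt-4o-mini"),
    "mistral": Provider("https://api.mistral.ai/v1", "MISTRAL_API_KEY", "mistral-small-latest"),
}


def build_chat_request(provider_name: str, prompt: str, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the configured provider."""
    provider = PROVIDERS[provider_name]
    key = api_key if api_key is not None else os.environ.get(provider.api_key_env, "")
    body = {"model": provider.model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{provider.base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Because application code only calls `build_chat_request("mistral", ...)`, moving a workload to a self-hosted server that speaks the same protocol only requires a new `Provider` entry.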

Pros

- Lower costs and flexible deployment options.

- Open-source community support.

Cons

- Smaller ecosystem compared to larger providers.

- Requires more ML expertise to fine-tune.

5. Gemini API (Google DeepMind)

Google’s Gemini API succeeds Google’s earlier PaLM models and is tightly integrated into the Google Cloud ecosystem.

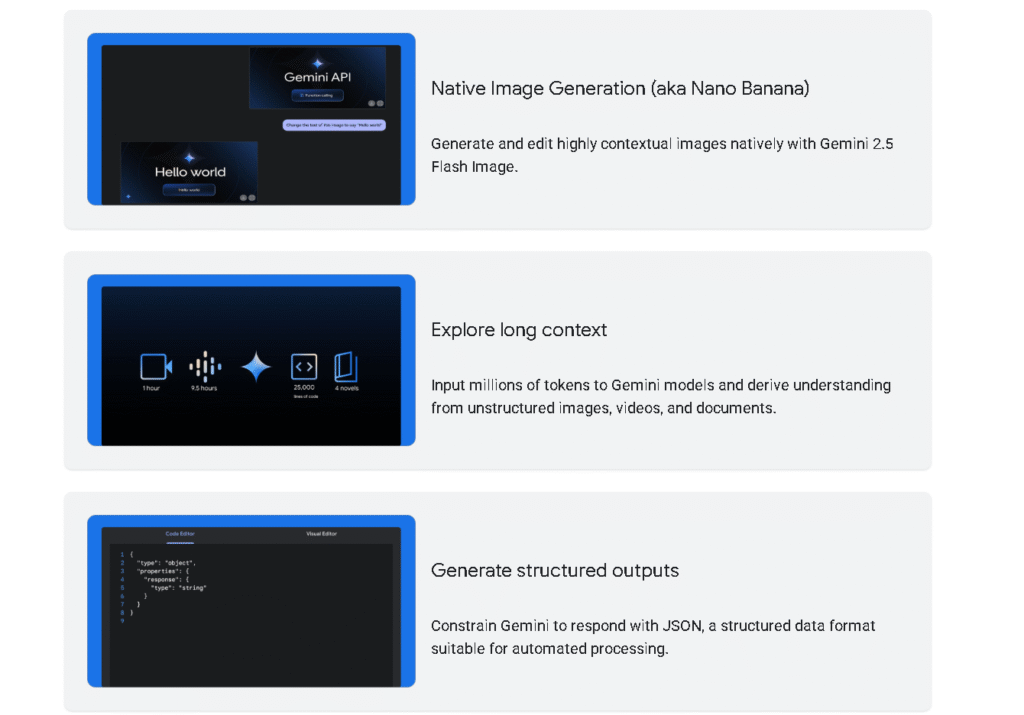

Features

- Models: Gemini 2.5 Pro and lighter variants such as Gemini 2.5 Flash.

- Multimodal Support: Works with text, code, and images.

- Context-Aware Pricing: Pay only for tokens actually processed.

- Cloud Integration: Deeply connected to Google’s AI stack.
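The Gemini API uses a different request shape from the OpenAI-style chat format: prompts go in a `contents` list of `parts`. The sketch below builds a text-only `generateContent` request for the Gemini Developer API's REST endpoint, passing the key in the `x-goog-api-key` header, and extracts the reply text. The `v1beta` path and model name are assumptions to check against Google's current documentation; Vertex AI on Google Cloud uses different endpoints and OAuth-based authentication.

```python
import json
import urllib.request

BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_gemini_request(api_key: str, prompt: str, model: str = "gemini-2.5-pro") -> urllib.request.Request:
    """Build a single-turn, text-only generateContent request."""
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"x-goog-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text parts of the first candidate in a generateContent response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

Multimodal requests follow the same pattern, adding non-text parts (such as inline image data) alongside the text part.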

Pricing

- Starts at about $1.25 per million input tokens.

- Enterprise bundles available through Google Cloud.

Best Use Cases

- Apps built natively on Google Cloud.

- Multimodal enterprise solutions.

- Scalable global applications.

Pros

- Competitive pricing compared to GPT and Claude.

- Tight integration with Google’s infrastructure.

Cons

- Documentation and developer resources are less polished.

- Limited rollout in certain regions.

LLM APIs Pricing Comparison (2025)

| Provider | Model | Input (per 1M tokens) | Output (per 1M tokens) | Context Window | Fine-tuning | Free Tier |
|---|---|---|---|---|---|---|
| OpenAI | GPT-4o | $5.00 | $15.00 | 128k | Yes | Yes |
| Anthropic | Claude 3.5 Sonnet | $3.00 | $15.00 | 200k | No | Yes |
| Cohere | Command R+ | $1.50–$3.00 | $3.00–$6.00 | 128k | Yes | Yes |
| Mistral | Mixtral | ~$1.00 | ~$2.00 | 32k–64k | Yes | Yes |
| Google | Gemini 2.5 Pro | $1.25 | $5.00 | 1M | Yes | Yes |

How to Choose the Right LLM API

- For cost-effective scaling, startups on a tight budget might favor Cohere or Mistral.

- Claude might be a safer choice for enterprise developers working in compliance-heavy fields.

- GPT is likely the most versatile choice for general-purpose and coding-focused applications.

- Gemini might be the most seamless option for teams that are Google Cloud native.

Start by estimating your expected token usage, testing free tiers, and deciding whether you need multimodal support or fine-tuning.
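Estimating cost from token usage is simple arithmetic: cost = (input tokens ÷ 1,000,000) × input price + (output tokens ÷ 1,000,000) × output price. The sketch below applies that formula to per-1M-token prices taken from this article's pricing table. The workload figures are hypothetical, and provider prices change often, so treat the price table as an input to update rather than a quote.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_m: float   # USD per 1M input tokens
    output_per_m: float  # USD per 1M output tokens


# Prices as listed in this article's 2025 table; check provider pricing pages before budgeting.
PRICES = {
    "gpt-4o": Price(5.00, 15.00),
    "claude-3.5-sonnet": Price(3.00, 15.00),
    "mixtral": Price(1.00, 2.00),
}


def monthly_cost(price: Price, requests_per_day: int, input_tokens: int,
                 output_tokens: int, days: int = 30) -> float:
    """Estimated monthly cost in USD for a steady workload."""
    total_in = requests_per_day * input_tokens * days
    total_out = requests_per_day * output_tokens * days
    return total_in / 1_000_000 * price.input_per_m + total_out / 1_000_000 * price.output_per_m


# Hypothetical workload: 10,000 requests/day, 500 input and 300 output tokens each.
for name, price in PRICES.items():
    print(f"{name}: ${monthly_cost(price, 10_000, 500, 300):,.2f}/month")
```

For this workload (150M input and 90M output tokens per month), output tokens account for $1,350 of GPT-4o's $2,100 total at these prices, so output-heavy applications should compare output prices closely.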

FAQ: LLM APIs for Developers

1. What is an LLM API?

An LLM API is a hosted service that allows developers to use large language models like GPT, Claude, or Cohere through simple API calls, without needing to train or maintain the models themselves.

2. Which is the best LLM API in 2025?

The best LLM API depends on your needs. GPT (OpenAI) is strongest for reasoning and code generation, Claude (Anthropic) excels in safety and long context, Cohere is best for embeddings and multilingual tasks, Mistral is affordable and open-source friendly, and Gemini (Google) integrates seamlessly with Google Cloud.

3. How much does it cost to use an LLM API?

Pricing varies by provider. In 2025, OpenAI’s GPT-4o costs about $5 per million input tokens, Claude 3.5 Sonnet costs around $3 per million input tokens, Cohere ranges between $1.50 and $3, Mistral is about $1, and Gemini starts at $1.25 per million input tokens. Many providers also offer free tiers for developers.

4. Can I use LLM APIs for free?

Yes, several providers offer free access for developers to experiment with. Cohere, Mistral, and OpenAI provide limited free usage, such as trial credits or rate-limited free tiers, so you can prototype without upfront cost.

5. How do I integrate an LLM API into my application?

You typically start by signing up with a provider, generating an API key, and then calling the API via REST or SDK. For example, to integrate the GPT API, developers send a request with a prompt and receive a generated response. Tutorials for each provider cover authentication, token usage, and best practices for performance.
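As a minimal illustration of that sign-up, key, and request flow, the sketch below calls OpenAI's Chat Completions REST endpoint using only Python's standard library, reading the key from an `OPENAI_API_KEY` environment variable. The model name is a placeholder, and the official SDKs add conveniences such as retries and typed responses, so treat this as a sketch rather than a production client.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build a POST request that sends the prompt as a single user message."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(response_json: dict) -> str:
    """Extract the generated text from a Chat Completions response."""
    return response_json["choices"][0]["message"]["content"]


def ask(prompt: str, timeout: float = 30.0) -> str:
    """Send the prompt and return the reply (needs network access and an API key)."""
    api_key = os.environ["OPENAI_API_KEY"]  # never hard-code keys in source
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=timeout) as resp:
        return parse_reply(json.load(resp))
```

Usage: `print(ask("Summarize the benefits of hosted LLM APIs in one sentence."))`. The response also includes a `usage` object with prompt and completion token counts, which you can log to track costs.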

6. What factors should I compare when choosing an LLM API?

Key factors include reasoning accuracy, latency, pricing, context window size, safety features, and whether the provider supports fine-tuning or multimodality. Developers often run small-scale pilots across multiple APIs before committing.

Conclusion

The best LLM API for developers in 2025 depends on your project's priorities. Cohere offers reasonably priced NLP-first tools, Mistral offers open-source flexibility, Gemini offers robust performance for Google Cloud-native apps, Claude stands out for safety and long-context use cases, and GPT remains the strongest choice for general reasoning and versatility.

Developers no longer need to train models from scratch. Instead, they can focus on integrating the right API to ship AI-powered applications quickly, efficiently, and at scale.

At tooljunction, we share honest AI tool reviews and tutorials to help you choose the right tools for your business.